DevOps

DevOps unites development and operations. It is the practice of breaking up monolithic architectures and teams into smaller, autonomous teams that can build, deliver, and run applications.

Platform Engineering (PE) focuses on abstracting away infrastructure and other concerns that distract DevOps teams from delivering on their domain. PE is a fairly new buzzword/concept and is really just a subset of DevOps.

Site Reliability Engineering (SRE) focuses on helping DevOps and internal platform teams increase reliability, scalability and security.

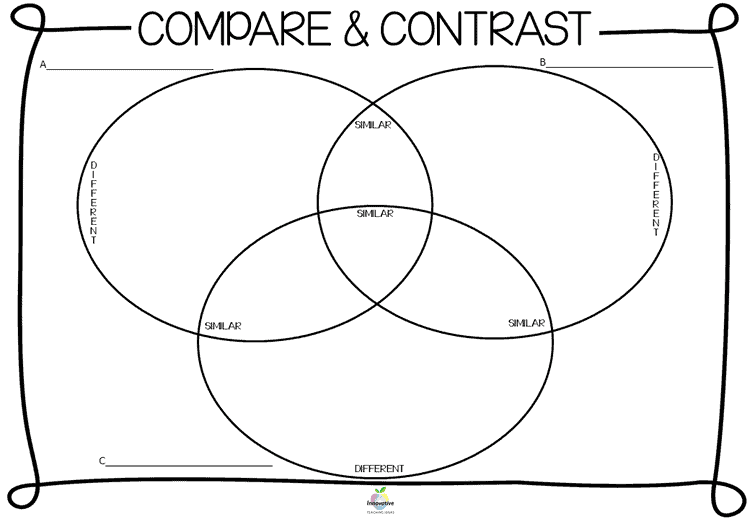

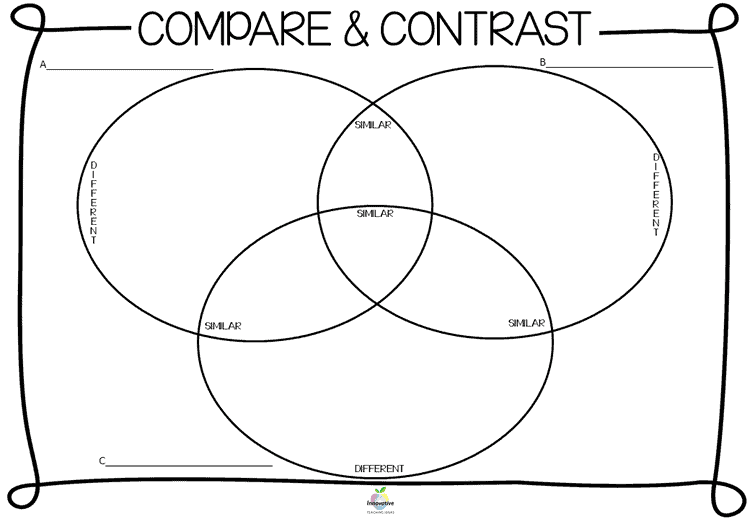

DevOps vs SRE vs PE

- DevOps focuses on the development side.

- SRE focuses on the operations side.

- PE focuses on internal development enablement and is really a part of DevOps.

SRE and Platform Engineering benefit from the three ways of DevOps:

- Concentration on increasing flow

- Tight feedback loops

- Continuous experimentation, learning and improvement

Role comparisons:

- Infrastructure Engineer – Generic term for engineers who work on core infrastructure.

- Cloud Engineer – Engineers who work on public cloud (AWS, Azure, GCP, etc.).

- SRE – Software engineers who focus on application reliability, budgeting uptime, and toil automation. Three-letter terms are their friends (SLO, SLA, SLI).

- DevOps Engineer – Infrastructure engineers who focus on reducing silos between development teams and infrastructure teams. NOTE: If your team has dedicated DevOps Engineers, your org isn’t really practicing DevOps.

- Platform Engineer – Engineers who focus on designing and building tools and workflows that enable self-service. They are enablers of software engineering teams.
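The "budgeting uptime" part of the SRE role above can be made concrete with a small calculation. The sketch below assumes an availability SLO expressed as a fraction (for example 0.999) over a rolling window measured in days. The function names `error_budget_minutes` and `budget_remaining_minutes` are hypothetical and chosen for illustration only; they do not come from any particular SRE tool.

```python
def error_budget_minutes(slo_target: float, window_days: int = 30) -> float:
    """Return the minutes of downtime an availability SLO allows in the window.

    slo_target is a fraction, e.g. 0.999 for "three nines" (an assumed convention).
    """
    if not 0.0 < slo_target <= 1.0:
        raise ValueError("slo_target must be in the range (0, 1]")
    if window_days <= 0:
        raise ValueError("window_days must be positive")
    window_minutes = window_days * 24 * 60
    # The error budget is whatever share of the window the SLO does not cover.
    return (1.0 - slo_target) * window_minutes


def budget_remaining_minutes(
    slo_target: float, downtime_minutes: float, window_days: int = 30
) -> float:
    """Return the unspent error budget; a negative value means the SLO was missed."""
    return error_budget_minutes(slo_target, window_days) - downtime_minutes


if __name__ == "__main__":
    budget = error_budget_minutes(0.999, window_days=30)
    remaining = budget_remaining_minutes(0.999, downtime_minutes=10, window_days=30)
    print(f"budget: {budget:.1f} min, remaining: {remaining:.1f} min")
```

With a 99.9% SLO over 30 days, the budget comes to roughly 43.2 minutes of downtime. A team might use the remaining budget as a signal for when to slow down risky releases, though the exact policy is an organizational choice.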